Welcome to the third post in my four-part series. This is where things start to get more fun. We’ve cleared most of the business decisions, and it’s now time to get technical and discuss some hardware options. If you haven’t been following along, please see my prior two posts: Private Cloud Architecture – pt 1 and Private Cloud Architecture – pt2 – Selecting Providers.

Network Hardware Decisions

There’s a lot of hardware necessary in each datacenter to provide full redundancy. In addition to having redundant hardware itself, ensure all equipment is redundantly wired so that there is no single point of failure. Make sure you don’t forget to order the redundant PSUs for all network equipment (for some reason many vendors don’t include this by default).

As a personal preference, I also avoid networking hardware that uses a shared chassis. I don’t have a clear view on the likelihood of a chassis failure; while I’m sure it’s very rare, it is still non-zero. I prefer going with a shared-nothing architecture when it comes to hardware.

Routers

Routers are used to quickly route packets from one physical or virtual interface to another. They do so at a per-packet level, without any sort of state or session tracking. Because routers do not track traffic flows, established connections won’t drop when the route the traffic takes from point A to B changes. This can be a stumbling block for those attempting to use a firewall as a router: a firewall uses stateful connection tracking, so connections will drop as routes change, such as when a P2P circuit goes down and traffic is redirected through a different datacenter to reach the same ultimate endpoint.

Forwarding Plane

Routing information is exchanged using Exterior Gateway Protocols like BGP, as well as Interior Gateway Protocols like OSPF. It is extremely important for the router to have a control plane that is separate from its data (or forwarding) plane; verify this for any model you are considering. Typically the control plane is a general purpose CPU like an Intel Xeon processor, whereas the forwarding plane should always be a custom ASIC.

There are routers out there that aren’t real routers, meaning they do everything on the general purpose CPU … avoid these. Stick with one of the large router vendors like Juniper or Cisco, and work with a Sales Engineer at the vendor to determine the caveats with various models and ensure all traffic is forwarded using an ASIC. The ASIC on a router can look up the destination of a packet at wire speed, even with millions of possible routes in its forwarding table, by using TCAM for lookups. On a general purpose CPU, a lookup can take hundreds of CPU cycles as the routing tables grow, adding a significant amount of latency. In addition to forwarding lookups, these custom ASICs should also support things like BFD timers and GRE encapsulation without needing to involve the host CPU.
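To make the lookup concrete, here is a minimal Python sketch of longest-prefix matching, the operation a forwarding plane performs for every packet. The table contents and next-hop names are made up for illustration. Note that this software version scans the table entry by entry, which is the kind of work that gets slower as the table grows; a TCAM instead compares the destination against all entries in parallel.

```python
import ipaddress

# Hypothetical forwarding table: prefix -> next hop (illustrative values only).
FIB = {
    ipaddress.ip_network("0.0.0.0/0"): "transit-a",
    ipaddress.ip_network("10.0.0.0/8"): "core",
    ipaddress.ip_network("10.20.0.0/16"): "dc2-p2p",
}


def lookup(fib, destination):
    """Return the next hop of the most specific prefix containing destination."""
    addr = ipaddress.ip_address(destination)
    # Linear scan: every prefix is tested in turn (a TCAM does this in parallel).
    matches = [net for net in fib if addr in net]
    if not matches:
        return None
    # The longest prefix (largest prefix length) is the most specific match.
    best = max(matches, key=lambda net: net.prefixlen)
    return fib[best]


print(lookup(FIB, "10.20.1.5"))
print(lookup(FIB, "10.1.1.1"))
print(lookup(FIB, "8.8.8.8"))
```

The first address matches all three prefixes and is forwarded by the /16, the second falls back to the /8, and the third only matches the default route.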

RIB and FIB

The RIB is the routing database: all the possible routes to any given destination, regardless of whether each one is the best possible route. The FIB is the forwarding database, where the current best/shortest routes from the RIB are programmed into the forwarding plane ASIC. The current internet tables are almost 1M IPv4 routes and almost 200k IPv6 routes, but keep in mind that each IPv6 route will consume more of the available FIB space than an IPv4 route. You need to ensure your router has sufficient FIB not only for now, but also for at least the next 5 years. Many router datasheets aren’t clear on these metrics, so you should talk to your SE with the router vendor to confirm.

RIB size is usually a little more flexible as this is typically managed in the control plane and just uses RAM on the host as the tables grow. In this architecture, with 3 upstream providers plus multiple peering sessions with each provider, the RIB size will be many multiples of the FIB.
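The relationship between the two tables can be sketched in a few lines of Python. This is a simplified illustration, not how any particular router implements it: the prefixes and provider names are invented, and picking the shortest AS path is a stand-in for the real BGP best-path selection, which weighs several more attributes.

```python
def build_fib(rib):
    """Keep one best path per prefix; 'best' here is just the shortest AS path."""
    return {
        prefix: min(paths, key=lambda path: path[1])[0]
        for prefix, paths in rib.items()
    }


def rib_path_count(rib):
    """Total number of paths held in the RIB across all prefixes."""
    return sum(len(paths) for paths in rib.values())


# Hypothetical RIB: each prefix is learned from three providers.
# Each path is (next_hop, as_path_length).
rib = {
    "198.51.100.0/24": [("provider-a", 3), ("provider-b", 2), ("provider-c", 4)],
    "203.0.113.0/24": [("provider-a", 1), ("provider-b", 5), ("provider-c", 2)],
}

fib = build_fib(rib)
print(rib_path_count(rib), "paths in the RIB")
print(len(fib), "entries in the FIB:", fib)
```

Every additional provider or peering session adds paths to the RIB, while the FIB stays at one entry per prefix.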

Other

Avoid using any sort of built-in “clustering” technology that makes your routers act as a single unit. You are always going to be better off allowing routing protocols to do what they do. Use full mesh iBGP across all routers. Use aggressive BFD timers on all routing protocols. You will achieve sub-second failovers without needing any specialized tooling. Though clustering solutions may seem desirable from a management standpoint, clustering is a complex technology and things can often go sideways through no fault of your own, especially during upgrades, even if the vendor claims to support In-Service Software Upgrades (ISSU).

Since all routers act completely independently and operate with industry-standard protocols, there’s no reason they need to be the same model or even from the same vendor … except for the fact that it’s a lot easier to manage if they’re all identical. Being independent and vendor-neutral may also be desirable if you have some existing hardware you wish to repurpose that is still sufficient for your needs.

My personal preference here is the Juniper MX series routers. In particular, the MX204s are very capable at a good price point; however, Juniper has recently announced their end of life with no similar offering as a replacement. It’s unclear if they have a new model in the works, if the chip shortage hit them hard, or if they realized this model was simply cannibalizing sales of their pricier units. Given the price points, I’d personally research whether the ACX series routers would meet the solution’s needs, at least until Juniper introduces a new MX router series that is price competitive. You can expect to pay around $30k per router with 5 years of support and warranty; with 6 total, that comes to about $180k.

Update: the MX204 EOL has been revoked. I guess I wasn’t the only one who wondered what was going on with this 🙂

Switches

At a high level, a switch forwards packets from one directly connected device to another at wire speed based on MAC addresses. Switches can vary greatly in feature sets and port speeds. In this private cloud environment, I am recommending a total of three switches per datacenter. Two switches will be core switches where all servers and network devices redundantly connect via high speed links, then one as a management switch where management interfaces such as IPMI can connect natively using lower speeds and RJ45 ports. There are lots of new technologies out there your switches are likely to support like VXLANs and EVPNs, but I am not advocating for their use in this architecture.

Core Switches

The core switches need to only support a few technologies:

- VLAN support. VLANs are used to logically separate networks for security and efficiency. Low end switch vendors may just call this a feature of “managed” switches vs “unmanaged”.

- LACP support. LACP is also known as 802.3ad and is used to aggregate multiple links into a single virtual link for both load balancing and failover. Some vendors may call this a PortChannel.

- MLAG support. MLAG is an extension on top of LACP which allows ports from different switches to participate in the same LACP virtual link. Some switch vendors may generically include this as part of their “stacking” technology.

- 100G interfaces. 100G technology has recently become economical and the technology stack I am proposing will make good use of this technology. 100G ports can often be split into 2x50G ports or 4x25G ports. At a minimum we need 4x100G ports (capable of being split into 2x50G ports each), with the remainder being 25G ports, per switch. If you can afford more ports, by all means go for it.

I’ve found the Mellanox/NVidia SN2010 switches running Cumulus Linux to be at a good price point for the supported features and very manageable, see my previous article: Adventures with Cumulus Linux & Mellanox 100G Switches. As long as the above required feature set is available, any reputable vendor should work. These SN2010 switches do have a very limited number of 100G interfaces, so I split these into 2x50G ports in this architecture.

As for basic switch configuration, this is the general overview:

- Interconnect the core switches together with redundant cabling. As a rule of thumb, the aggregate speed of the ports interconnecting the switches should equal the single highest speed active port in the network. So if you split your 100G ports into 2x50G (making 50G your highest port speed), then your switch to switch connection is adequate at 2x25G.

- This need not be at the highest link speed available on the switch as every other system will be dual-connected and traffic will not traverse the switch-to-switch links at all for these dual connected devices. Typically only IP Transit and P2P Link traffic will use the switch-to-switch links unless there is another link failure with a dual-connected system.

- Connect each router, firewall, management switch, and hypervisor to a port on each core switch (MLAG). Routers and Firewalls should be interconnected using at least the speed of the highest performance IP Transit or P2P Circuit. Hypervisors should use at least dual 50G interconnects.

- Be liberal with VLAN usage. Create a VLAN for each:

  - IP Transit provider

  - P2P Circuit

  - BGP Peers

  - External subnets/IP blocks

  - Internal networks based on role and security levels
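The switch-to-switch sizing rule of thumb from the list above can be written down as a quick check. This is only a sketch: the function name and port lists are hypothetical, and it treats the rule as "at least equal to" the fastest active port rather than strictly equal.

```python
def isl_is_adequate(active_port_speeds_gbps, isl_member_speeds_gbps):
    """Rule of thumb: aggregate inter-switch bandwidth >= fastest active port."""
    return sum(isl_member_speeds_gbps) >= max(active_port_speeds_gbps)


# 100G ports split into 2x50G, so 50G is the fastest active port.
split_ports = [50, 50, 50, 50, 25, 25, 25]
print(isl_is_adequate(split_ports, [25, 25]))

# If a 100G port is left unsplit, a 2x25G interconnect no longer satisfies the rule.
unsplit_ports = [100, 50, 50, 25, 25]
print(isl_is_adequate(unsplit_ports, [25, 25]))
```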

Expect to pay around $7k per switch with 5yrs support and warranty. In this architecture, you will need 2 per datacenter (6 total) totaling around $42k.

Management Switch

A management switch is used to connect to management ports on the various networking and hypervisor devices for out of band management purposes. These ports are typically 100Base-T or 1GBase-T RJ45 ports and it is simply not cost effective to attempt to connect these to our core switches using transceivers (in fact, a lot of high end switches using transceivers won’t negotiate down to 100Base-T). Management ports on servers and other equipment are singular with no option for redundant connections; therefore there is no need for a second management switch.

The management switch doesn’t even need to support VLANs, but it does need to support LACP in order to connect to each of the core switches (you are unlikely to find a switch with LACP support but without VLAN support). Typically all ports on the management switch will be in a single VLAN configured in access mode, as it is a best practice to segment all management interfaces into a single non-public network.

So our target here is a 24-port or 48-port RJ45 1GBase-T switch. It will probably have a couple of higher-speed uplink ports that can be used to connect to your core switches, but this isn’t required due to the low bandwidth needs of management ports. You still want to go with a good vendor with a good reliability record, but this shouldn’t be an expensive switch. Expect to pay less than $4k for a quality switch with 5 years of warranty and support. In this architecture you will need 1 per datacenter (3 total), totaling around $12k.

Firewalls

Unlike routers, most firewalls operate using primarily the host CPU. Only extremely expensive firewalls tend to use ASICs, and then, usually only for their Next-Gen firewall inspection (a bandwagon I have yet to hop on). Because of this, there are far more firewall vendors. You can choose from legacy vendors like Juniper and Cisco, or go with some of the more modern competitors like Palo Alto, Fortinet, SonicWall, or Barracuda.

Firewalls focus on sessions or flows (unlike routers, which focus on packets). A firewall will look up a route when a session is first initialized, record the session in its lookup table, and use that entry to automatically match any future packets. Any packets that don’t belong to a known session are dropped.
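A toy session table shows the behaviour described above. This is a sketch under stated assumptions, not how any vendor implements it: the class, the packet fields, and the interface names are hypothetical, only one direction of a TCP flow is modelled, and the egress chosen at session setup is assumed to be reused for later packets.

```python
class StatefulFirewall:
    """Toy session table; names and packet fields are hypothetical."""

    def __init__(self, allowed_dports):
        self.allowed_dports = set(allowed_dports)
        self.sessions = {}  # flow 5-tuple -> egress interface chosen at setup

    def handle(self, pkt, route_lookup):
        flow = (pkt["proto"], pkt["src"], pkt["sport"], pkt["dst"], pkt["dport"])
        if flow in self.sessions:
            # Known session: match the existing entry, no new route lookup.
            return self.sessions[flow]
        if pkt.get("syn") and pkt["dport"] in self.allowed_dports:
            # New session permitted by policy: look up the route once and record it.
            egress = route_lookup(pkt["dst"])
            self.sessions[flow] = egress
            return egress
        return None  # not part of a known session: dropped


syn = {"proto": "tcp", "src": "192.0.2.10", "sport": 40000,
       "dst": "198.51.100.5", "dport": 443, "syn": True}
data = dict(syn, syn=False)  # a later packet of the same flow

fw_a = StatefulFirewall({443})
fw_b = StatefulFirewall({443})

first = fw_a.handle(syn, lambda dst: "transit-a")     # session created on fw_a
later = fw_a.handle(data, lambda dst: "transit-b")    # matched from the session table
rerouted = fw_b.handle(data, lambda dst: "transit-a") # fw_b never saw the session
print(first, later, rerouted)
```

The last call is the failure mode to keep in mind: when a route change steers an established connection through a different firewall, that firewall has no matching session and drops the packets.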

When looking at firewall specifications, you need to look at overall firewall (not NextGen) throughput as well as VPN throughput. In general, you will want VPN throughput matching your P2P link speed, and firewall throughput around the aggregate of your uplink IP Transit speeds. Your firewall choice must also support clustering technology for failover (or better yet, load balancing). Also pay attention to how the performance figures were measured, such as the packet size used: a figure generated with 1400-byte packets can be more misleading than an IMIX figure.

One other thing to keep in mind: never use any UI provided by a firewall. Seriously, just don’t. Get comfortable with the command line. The UI typically is extremely limited, provides no real change control support for audit purposes, and will have a huge security footprint. If the firewall has a UI and doesn’t let you disable it completely, move on to a different vendor. Also, don’t use any sort of global management solution provided by the vendor for the same reasons (e.g. Junos Space).

At my company, we’re a Juniper shop, so the SRX series firewalls were the obvious choice; however, we’ve definitely had our difficulties. Our upgrades (which are required at least quarterly) aren’t as smooth as we’d hope. Often an upgrade requires a full outage, even when the advertised system capabilities state that ISSU (In-Service Software Upgrade) is supported. Also, our current firewalls (Juniper SRX 1500) are a bit underpowered: they do not meet the recommended performance requirements for site to site VPNs. We’ve researched upgrading to the 4k series; however, they are getting long in the tooth, so we probably can’t entertain that expense until their next hardware refresh (especially considering the short lifecycle of the MX204 routers).

I’d personally like to explore Palo Alto if I had the time. I seem to hear of fewer major security issues with them, and they are generally well regarded.

Expect to pay around $20k per firewall including 5yrs support and warranty, totaling $120k across all datacenters.

Optical Line Protection Switch

An optical line protection switch is a device that uses a physical mirror controlled electronically to switch light from one fiber pair to another. The OLP will monitor light coming from the transceivers on your switches, and if you have a switch failure, it will automatically redirect light to the other switch within a few seconds.

Without an OLP, switch upgrades or an unplanned switch outage would result in any optical links being down for the duration of the outage, taking out at least one if not more providers. The only other countermeasure to such an issue would be to purchase redundant links from each provider, but that would be extremely cost prohibitive.

While it may seem that an OLP adds a single point of failure, it is very much a physical point of failure (like a cable) and not an electrical one. Since an OLP uses a physical mirror to redirect light, even in the event an OLP has no power, the mirror is guaranteed to stay in the same state that it last was.

The only OLPs I’ve had experience with are from CTC Union, and I have had no issues. While I would like the automatic switch time to be sub-second, these do switch within 5s which is acceptable in the unlikely event of an unexpected failure. Other vendors I’ve found include FS.com and NTT Global. Expect to pay around $5k per OLP chassis + 3 modules per datacenter, for a total of $15k.

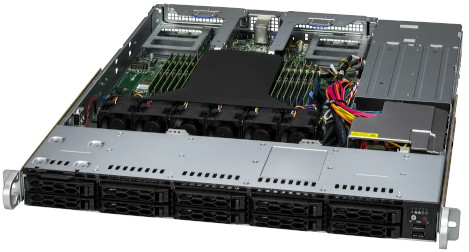

Hypervisor hardware

When selecting hypervisors for this architecture, I opt for more hypervisors rather than beefier ones. This solution uses a minimum of 5 hypervisors per datacenter. I’m partial to the latest generation AMD EPYC processors, which have high core counts in a single socket. We’re not using specialized accelerators or GPUs, so we do not need a large number of PCIe lanes. Sticking with a single socket means a less complex system, reduced cost, and, if licensing software “per socket”, reduced licensing costs as well. Running more, smaller nodes also provides additional redundancy, and having at least 5 nodes per datacenter allows us to utilize additional disk subsystem clustering options for efficiency.

A few base requirements here are:

- Fill enough RAM slots to ensure the best performance based on supported channels, but if possible, leave half the slots unfilled for future expansion. In general that means a target of at least 512GB to 768GB per node.

- Make sure there are 8-10 NVMe drive slots per node, and fill half of them with the largest enterprise grade disks you can (I personally like the Intel/Solidigm D7-P5510 7.68TB drives), leaving the rest for future expansion.

- Make sure to have a dual port 100Gbps NIC per node (such as the Mellanox/Nvidia MCX516A-CDAT).

- Use at least a 32 core CPU with a high base frequency (3GHz+).

- Use RAID-1 for boot disks. You typically won’t want to consume your NVMe slots for this, so it is recommended to use a PCIe dual port M.2 RAID controller like the MegaRAID 9540-2M2 built for this purpose.

I’ve personally found SuperMicro to offer the best bang for the buck, undercutting Dell and HPE by at least 20% for similarly spec’d units. I’ve never had any reliability issues with SuperMicro.

If you’ve been following along with the above recommendations, that will give you 160 CPU cores, 2.5TB-3.8TB RAM, and up to 192TB NVMe storage (raw, with five 7.68TB drives in each of the 5 nodes; usable capacity will be reduced when we add replication) per datacenter.
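These per-datacenter figures follow directly from the base requirements listed earlier, and the arithmetic is easy to rerun with your own node counts. The sketch below assumes 5 nodes, the minimum 32 cores per node, and half of 8 or 10 NVMe slots filled with 7.68TB drives; the 192TB figure corresponds to five drives per node.

```python
NODES = 5                      # hypervisors per datacenter
CORES_PER_NODE = 32            # minimum core count from the requirements
RAM_GB_PER_NODE = (512, 768)   # low and high RAM targets
DRIVE_GB = 7680                # one 7.68TB NVMe drive
DRIVES_PER_NODE = (4, 5)       # half of 8 or half of 10 slots filled

cores = NODES * CORES_PER_NODE
ram_tb = tuple(NODES * gb / 1024 for gb in RAM_GB_PER_NODE)
nvme_raw_tb = tuple(NODES * n * DRIVE_GB / 1000 for n in DRIVES_PER_NODE)

print(f"CPU cores: {cores}")
print(f"RAM: {ram_tb[0]:.2f}TB to {ram_tb[1]:.2f}TB")
print(f"Raw NVMe: {nvme_raw_tb[0]:.1f}TB to {nvme_raw_tb[1]:.1f}TB")
```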

Of course everyone’s requirements are different. What if, for instance, you need 8PB of storage per datacenter? No problem, throw in a SAS3 HBA to each hypervisor and pair it with one or more JBOD enclosures (daisy-chained) per hypervisor. Of course, this will probably triple your overall hypervisor costs, but be significantly cheaper than renting the space in the public cloud.

Expect to pay around $20k per hypervisor fully outfitted. This architecture requires 15 hypervisors, so that brings us to around $300k. Of course if you need more CPU, RAM, Disk or nodes, adjust accordingly.

Other hardware devices

Finally, we come to the remaining bits, which are smaller but still important.

You’ll probably want an NTP server per datacenter that utilizes a physical node. Virtual machines just aren’t a great choice as a time keeper due to various scheduling skews. Time is important, so you want to split this out onto its own hardware. This doesn’t have to be anything fancy; in fact, a low end 1U running something like a Xeon-D processor would be fine. Expect to pay around $1k per NTP server.

Likewise, you probably want an emergency backdoor, which means you’ll need a machine you can ssh into to access the serial consoles of your networking equipment. You’ll need at least 7 USB ports to interconnect all the recommended network hardware, plus some USB to serial console cables (find ones with FTDI chips). This too does not need to be anything fancy and will probably be substantially similar to the NTP server, so let’s estimate $1k per datacenter.

Then we have the odds and ends like PDUs (I like the Tripp Lite 208V local metered PDUs), transceivers (fs.com has proven reliable for me), and cables. Let’s assume that will be around $2.5k per datacenter.

Across three datacenters that is ($1k + $1k + $2.5k) × 3 = $13.5k, so let’s round up and call it $14k total for all datacenters combined.

Conclusion

Hopefully this post has given you some insight into the various hardware components necessary for setting up a private cloud of your own. Though I’ve listed some vendors I’ve used in the past here, the decision is up to you; go with what you’re familiar with.

Now the part most readers are interested in, total hardware costs:

- Routers: $180k

- Switches: $42k + $12k = $54k

- Firewalls: $120k

- OLP: $15k

- Hypervisors: $300k

- Other: $14k

That comes to a grand total of $683k. Wow, that’s a big number. But if we amortize that over 5 years, it comes out to about $11.4k/mo, a much more reasonable number given what we’re getting. Of course, a lot of this hardware will last longer than 5 years; as long as it works reliably and is receiving security patches, there’s no mandate to replace any of it if it still suits your needs.
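If your unit prices or quantities differ, the totals are easy to recompute. Here is a small Python sketch using the per-unit estimates and counts from this post (all figures in thousands of dollars); the "other" line uses the rounded figure from the previous section, and the variable names are just for illustration.

```python
# (unit price in $k, quantity across all three datacenters)
line_items_k = {
    "routers": (30, 6),
    "core switches": (7, 6),
    "management switches": (4, 3),
    "firewalls": (20, 6),
    "OLP": (5, 3),
    "hypervisors": (20, 15),
    "other (rounded)": (14, 1),
}

AMORTIZATION_MONTHS = 5 * 12

total_k = sum(price * qty for price, qty in line_items_k.values())
monthly_k = total_k / AMORTIZATION_MONTHS

for name, (price, qty) in line_items_k.items():
    print(f"{name}: ${price * qty}k")
print(f"Total: ${total_k}k")
print(f"Amortized over 5 years: ${monthly_k:.1f}k/mo")
```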

In the next post, I’ll be discussing configuration and software details of the private cloud including routers, firewalls, and hypervisors.

The next post in this series is now available: Private Cloud Architecture – pt 4 – Routing & Software