Overview

This is part 1 of a four-part series discussing the architecture of private clouds and when they may be a more appropriate choice than public clouds. A private cloud is a virtualized environment running on your own hardware, as opposed to a public cloud like AWS, Azure, or GCP. The architecture described in this series should be a good fit for the vast majority of businesses that provide some sort of service to end users and need high availability and good performance.

Private vs Public

There are many reasons why a private cloud is desirable over a public cloud, but this choice is not for everyone. If you have in-house technical expertise, and are running a significant number of virtual machines already (or plan to), or you have high bandwidth requirements, a private cloud might be the right choice.

It is often hard to calculate an accurate cost for public cloud services: you are charged not only by the hour per VM based on its size, but also for storage and for data movement in and out of each VM. From a cost standpoint alone, counterintuitively, it may be worth building your own private cloud. I say counterintuitively because this does not seem to be the common assumption. The companies providing cloud services are doing it for profit; their bulk purchasing power doesn’t actually get passed on to you as cost savings. Public cloud providers also offer many value-added services, which act as lock-ins that make it cost-prohibitive to move away from them. If you decide to use a public cloud, I’d strongly suggest avoiding any provider-specific technology, as you may need to move away from the chosen provider in the future.

There are other reasons a private cloud may be beneficial, such as a need for guaranteed latencies or specialized hardware (e.g. HSMs), or simply the uptime benefits. The environment for your business does not need to be as large and complex as a public cloud provider’s, and that simplicity can often lead to less downtime. (Who hasn’t heard of AWS taking down half the internet because its US East region went down? Granted, that’s bad design by the companies using AWS... perhaps a topic for another day.)

Now you may be asking, when is a private cloud not the right choice? If you are very small (let’s say < 100 VMs), don’t have the technical expertise in-house, or need unlimited scaling during infrequent periods of high load (for the older readers, remember the Slashdot effect?), then by all means you should probably use a public cloud.

It is important to remember that the number of VMs can grow dramatically when compliance with most security standards is combined with high availability. Most security standards for service deployments strictly mandate a single purpose per machine (or VM), which means not co-hosting different services on the same system. You then need at least 2 instances per service in a single datacenter to provide local high availability, and the same again in at least one other datacenter for global high availability. In the public cloud, you can see how this might become expensive very quickly.
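The multiplication described above can be sketched in a few lines. This is only an illustration: the function name `required_vms` and the service counts are hypothetical, and it assumes every service gets the same number of instances in every datacenter.

```python
def required_vms(services, instances_per_service=2, datacenters=2):
    """Total VMs when every service runs alone on its VMs (single purpose per VM),
    with local redundancy in each datacenter and the same layout in every datacenter."""
    return services * instances_per_service * datacenters


if __name__ == "__main__":
    # Hypothetical service counts, purely to show how quickly the total grows.
    for services in (10, 25, 50):
        two_sites = required_vms(services)
        three_sites = required_vms(services, datacenters=3)
        print(f"{services} services: {two_sites} VMs in 2 datacenters, "
              f"{three_sites} VMs in 3 datacenters")
```

Under these assumptions, a business with only 25 distinct services already reaches the 100 VM mark with two datacenters.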

High Level Architecture

When you look at a public cloud, its architecture consists of multiple Regions (dispersed geographic areas such as US East vs US West), Availability Zones (datacenters within a region), Racks, and Nodes. Not much public information is available on how much is shared at each level in the public cloud. Some information suggests deploying in multiple availability zones is sufficient, but evidence from various public outages suggests deploying in multiple regions is safer.

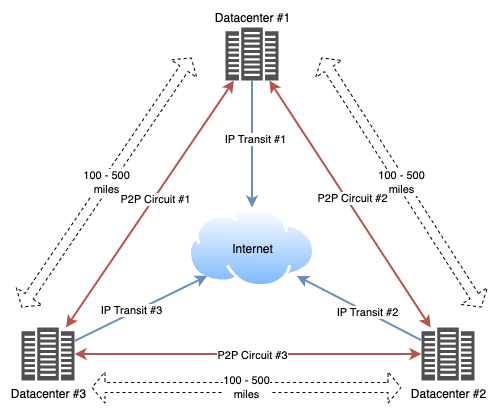

The architecture I would recommend to most businesses is 3 datacenters in a roughly equilateral triangle, geographically separated by at least 100 miles but less than 500 miles. These datacenters would be third-party colocation facilities that provide space and power and house multiple telco providers. Such an architecture sits somewhere between the concept of each datacenter being a Region and being an Availability Zone. It facilitates low-latency interconnects for high-availability scaling with synchronous replication, while also providing sufficient redundancy in the case of a geographic event (hurricane, earthquake, man-made disaster).
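When shortlisting candidate sites, the 100 to 500 mile separation can be checked with a great-circle distance calculation. The sketch below uses the haversine formula; the site names and coordinates in `SITES` are made-up placeholders, and the function names are my own. Straight-line distance is only a first filter, since real fiber routes are longer.

```python
import math
from itertools import combinations

EARTH_RADIUS_MILES = 3958.8  # mean Earth radius

# Hypothetical candidate sites as (latitude, longitude) in degrees.
SITES = {
    "dc-a": (40.0, -75.0),
    "dc-b": (42.0, -78.0),
    "dc-c": (38.5, -78.5),
}


def great_circle_miles(a, b):
    """Haversine distance in miles between two (lat, lon) points given in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = (math.sin(dlat / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2)
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(h))


def check_triangle(sites, min_miles=100, max_miles=500):
    """Map each pair of site names to (distance_miles, within_range)."""
    result = {}
    for (name_a, pos_a), (name_b, pos_b) in combinations(sites.items(), 2):
        distance = great_circle_miles(pos_a, pos_b)
        result[(name_a, name_b)] = (distance, min_miles <= distance <= max_miles)
    return result


if __name__ == "__main__":
    for pair, (distance, ok) in check_triangle(SITES).items():
        print(pair, f"{distance:.0f} miles", "ok" if ok else "out of range")
```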

The datacenters would be interconnected in a full mesh of high-speed, low-latency circuits, with a maximum latency of 10 ms per circuit. Each datacenter would also be connected to at least one Tier 1 Internet provider, and may have other connections, such as to an Internet Exchange Point (IXP) if available.
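To see how the distance limit relates to the latency budget, here is a rough back-of-the-envelope sketch. It assumes light travels through fiber at roughly 200 km per millisecond (about two thirds of its speed in a vacuum), that the 10 ms figure is read as round-trip time, and it ignores equipment and queuing delay. The function names are hypothetical.

```python
KM_PER_MILE = 1.609344
FIBER_KM_PER_MS = 200.0  # approximate propagation speed of light in glass fiber


def full_mesh_circuits(sites):
    """Number of point-to-point circuits needed to fully mesh the given number of sites."""
    return sites * (sites - 1) // 2


def fiber_rtt_ms(path_miles):
    """Round-trip propagation delay in milliseconds over a fiber path of the given length."""
    one_way_ms = path_miles * KM_PER_MILE / FIBER_KM_PER_MS
    return 2 * one_way_ms


if __name__ == "__main__":
    print("circuits for 3 datacenters:", full_mesh_circuits(3))
    for miles in (100, 300, 500):
        print(f"{miles} mile path: about {fiber_rtt_ms(miles):.1f} ms round trip")
```

A 500 mile path works out to roughly 8 ms of round-trip propagation delay on its own, which leaves little headroom under a 10 ms budget once the actual fiber route (always longer than the straight line) and equipment delay are included.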

This architecture assumes low latency between datacenters is more important than latency from an end user to the business network, which is the more likely case. If latency to the end users is important, I would recommend replicating this triangle in multiple dispersed geographic regions. Be aware that synchronous replication will not be possible across such dispersed regions due to the significantly higher latency, so other approaches like eventual consistency might be required. In such a scaled deployment, be cautious with routing, as suboptimal routes could be chosen that send end users to a non-optimal datacenter. It may be necessary to use the same Internet providers in each dispersed region, different ASNs to separate the dispersed regions, or geo-DNS (yuk!).

Prerequisites

There are a few prerequisites any company must meet before they can begin architecting a private cloud environment. These prerequisites might be solvable prior to actual implementation, but can require a significant amount of up-front time.

Technical Expertise

First and foremost, you need in-house technical expertise in configuring routers, firewalls, and servers. A deep understanding of BGP, OSPF, VLANs, and IPsec is also required. Even mundane things such as cabling types and fiber transceiver types can be stumbling blocks. If you don’t already have this in-house expertise, it is not something you can outsource in a cost-effective way. It can also be extremely difficult to hire the necessary staff, because without pre-existing in-depth knowledge you may hire candidates who simply aren’t qualified and not know it until you’ve expended a lot of time and money.

Internet Number Registration

Depending on your operating region, you need to have an account with an Internet Number Registrar, formally known as a Regional Internet Registry (RIR). This may be ARIN in the US, RIPE NCC in Europe, APNIC in Asia-Pacific, AFRINIC in Africa, or LACNIC in Latin America.

You must first obtain an Autonomous System Number (ASN) from your registrar so you can advertise your routes on the public internet. You must also obtain IP address blocks to advertise.

For IPv6 address blocks, just apply to your Internet Number Registrar for your requested block size. Most organizations will go with a /44; a /48 is the minimum block size that can be advertised (a /44 is 16 /48 blocks).
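The /44 to /48 arithmetic can be checked with Python's standard `ipaddress` module. The prefix below is taken from the IPv6 documentation range (`2001:db8::/32`) and is only a placeholder for whatever block your registrar assigns.

```python
import ipaddress

# Placeholder /44 from the IPv6 documentation range, not a real allocation.
allocation = ipaddress.ip_network("2001:db8::/44")

# Split the allocation into the smallest blocks that can be advertised.
advertisable_blocks = list(allocation.subnets(new_prefix=48))

if __name__ == "__main__":
    print(f"{allocation} contains {len(advertisable_blocks)} /48 blocks")
    for block in advertisable_blocks[:3]:
        print(" ", block)
```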

IPv4 address blocks are not easily available if you don’t already have some. Some registrars like ARIN have a reserve of IPv4 blocks to facilitate IPv6 transition, so you might be able to ask for a single /24 (the minimum advertisable size) under this program. Otherwise, you either need to get on a waiting list, which is typically filled quarterly with blocks that have been returned (e.g. from defunct entities), or purchase from an IP broker (expensive). If you have time, the waiting lists appear to be fairly effective, as most requests appear to be filled. With the architecture described here, I would recommend a /22 (a /22 is 4 /24 blocks), which would allow you to dedicate a /24 to each datacenter and use one /24 for anycast.
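As a sketch of that allocation plan, the following splits a /22 into one /24 per datacenter plus one for anycast. The block `10.0.0.0/22` is private address space used purely as a stand-in (it cannot be advertised on the public internet), and the datacenter names and the `plan_ipv4` helper are hypothetical.

```python
import ipaddress


def plan_ipv4(block, datacenters):
    """Assign one /24 per datacenter and the next free /24 to anycast."""
    slices = list(ipaddress.ip_network(block).subnets(new_prefix=24))
    if len(slices) < len(datacenters) + 1:
        raise ValueError("block is too small for one /24 per datacenter plus anycast")
    plan = dict(zip(datacenters, slices))
    plan["anycast"] = slices[len(datacenters)]
    return plan


if __name__ == "__main__":
    # Placeholder block and site names for illustration only.
    for name, network in plan_ipv4("10.0.0.0/22", ["dc-a", "dc-b", "dc-c"]).items():
        print(f"{name}: {network}")
```

With three datacenters, a /22 is used up exactly; a smaller block such as a /23 would raise the error above.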

Don’t forget to use RPKI to cryptographically associate your IP address blocks with your ASN. It is also a good idea to use IRR to describe your routing policies. Finally, you should also set up a PeeringDB account and record the appropriate information in there. The MANRS project is a good resource for how to be a good network operator to reduce threats.
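To illustrate why RPKI matters, here is a simplified sketch of the route origin validation that other networks can perform against your published ROAs, following the valid / invalid / not-found outcomes described in RFC 6811. The `Roa` class and `validate_origin` function are my own illustrative names, not part of any RPKI tool, and the prefix and ASNs are placeholders (the ASNs come from the range reserved for documentation).

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Roa:
    """Simplified ROA: a prefix, the longest allowed announcement, and the authorized ASN."""
    prefix: str
    max_length: int
    asn: int


def validate_origin(route_prefix, origin_asn, roas):
    """Classify a BGP announcement as 'valid', 'invalid', or 'not-found'."""
    route = ipaddress.ip_network(route_prefix)
    covered = False
    for roa in roas:
        roa_net = ipaddress.ip_network(roa.prefix)
        # A ROA only applies if it is the same address family and covers the route.
        if route.version != roa_net.version or not route.subnet_of(roa_net):
            continue
        covered = True
        if roa.asn == origin_asn and route.prefixlen <= roa.max_length:
            return "valid"
    # Covered by at least one ROA but matched by none means invalid.
    return "invalid" if covered else "not-found"


if __name__ == "__main__":
    roas = [Roa("10.0.0.0/22", 24, 64500)]  # placeholder ROA
    print(validate_origin("10.0.1.0/24", 64500, roas))
    print(validate_origin("10.0.1.0/24", 64501, roas))
    print(validate_origin("192.0.2.0/24", 64500, roas))
```

Note how the ROA's maximum length needs to allow /24 announcements if you plan to advertise a /24 per datacenter out of a /22.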

Conclusion

This concludes the first part of our series. In future posts, I will go over the decision-making processes for choosing providers, hardware vendors, and software, along with estimated costs of each component. I will also talk about architecture choices I have made when deploying private clouds.

The next post in this series is now available: Private Cloud Architecture – pt2 – Selecting Providers